Distributed Inference

Real-time AI. Delivered at global scale.

Instantly deploy, connect, and scale AI workflows anywhere with peak performance, efficiency, and cost savings.

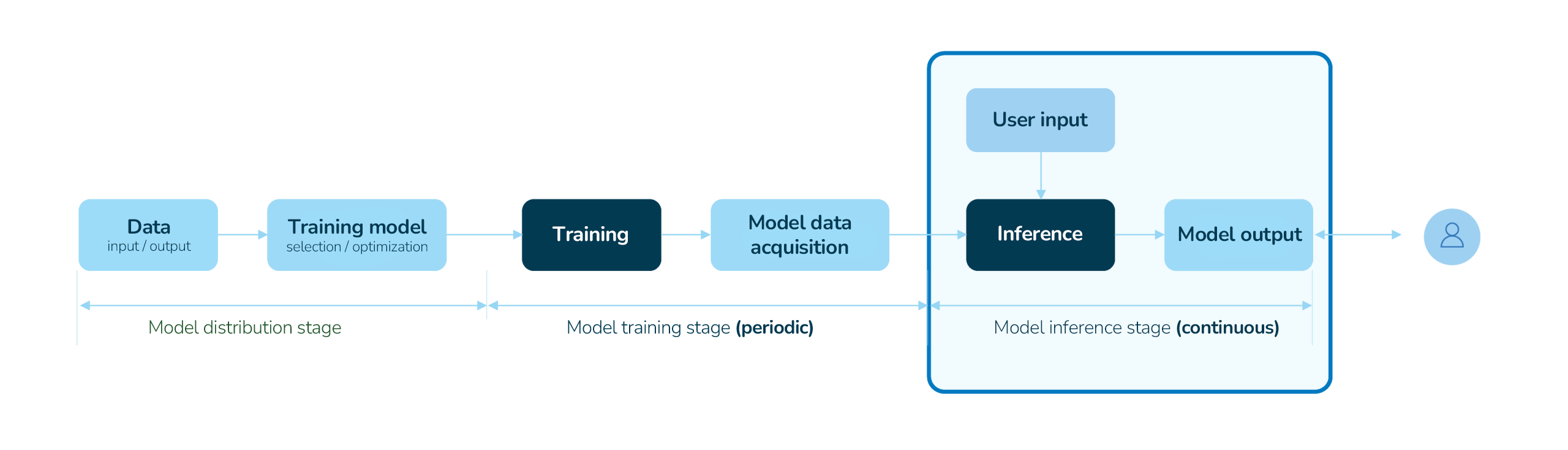

AI applications are only as good as their inference

Proactively optimizing inference costs and performance is critical to achieving real value from AI deployments.

It never stops

Every interaction between the AI and the user triggers inference. With agentic AI, inference evolves from a single response to multi-round reasoning, significantly increasing computational complexity.

It dominates lifetime cost

For most companies using AI, the ongoing cost of running models daily (inference) vastly outweighs the initial training cost, potentially accounting for 80-90% of the total lifetime expense.
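As a rough illustration of how that share arises, the sketch below compares a one-time training cost with a recurring daily inference cost over a deployment's lifetime. The function name and every dollar figure are hypothetical, chosen only to show the arithmetic; they are not measured data.

```python
def inference_share(training_cost: float, daily_inference_cost: float, days: int) -> float:
    """Return the fraction of total lifetime cost attributable to inference."""
    inference_total = daily_inference_cost * days
    return inference_total / (training_cost + inference_total)


# Hypothetical figures for illustration only:
# a $100,000 training run and $1,000 per day of inference over two years.
share = inference_share(training_cost=100_000, daily_inference_cost=1_000, days=2 * 365)
print(f"Inference share of lifetime cost: {share:.0%}")  # prints 88%
```

Under these assumed numbers, inference accounts for most of the spend, and the share keeps growing the longer the model stays in production.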

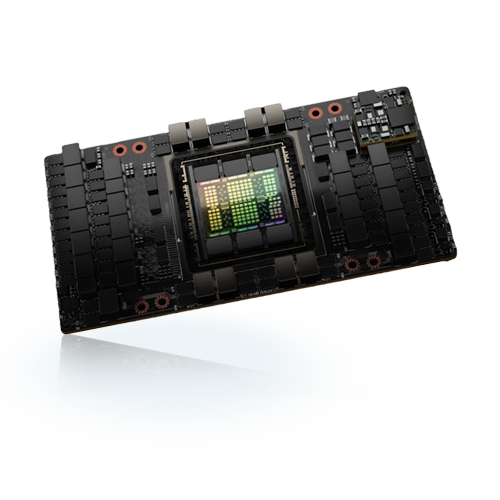

GPU compute built for intelligence

Inference sits at the intersection of performance, cost, and user experience. Running it reliably at global scale demands compute that keeps pace with growing model complexity and real-time demand.

With the right GPU infrastructure in place, teams can deploy models faster, operate more efficiently, and deliver consistent performance wherever users are located.

Scale performance your way

Upgrade your AI stack with top GPUs at competitive prices.

NVIDIA RTX 4090

- Quick prototyping and model development

- Generative image, video, and 3D creation

- Cost-efficient edge inference deployments

NVIDIA H100

- High-speed inference for large models

- Acceleration for LLM and multimodal AI

- Maximum throughput for production AI workloads

NVIDIA H200

- Top-tier inference for large LLMs and embeddings

- Multi-billion parameter generative models

- Optimized for distributed AI scaling

Run inference faster, anywhere

Pre-installed AI solutions

- Ollama, Stable Diffusion, and Llama preinstalled

- Intuitive web UI

Flexible options

- Use native OS and frameworks directly

Robust networking

- Cross-region private connections

- High capacity

- Cost-effective IP transit

Built on our hyperconnected global fabric

AI isn’t just about compute. It’s about the network that powers it.

Speed up global training and inference with ultra-low latency routing, intelligent traffic optimization, and high-capacity connectivity between major AI hubs on our massive, software-defined global private network spanning Asia, the Middle East, Africa, Europe, and the Americas.

Link GPU clusters across continents with L2/L3 private connections to quickly and reliably transfer checkpoints, embeddings, and datasets.
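To get a feel for what transfer times over such a link might look like, here is a minimal back-of-the-envelope sketch. The function name, the 80% link-efficiency default, the checkpoint size, and the link speed are all assumptions made for illustration, not figures for any specific network or product.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate the seconds needed to move size_gb gigabytes over a link_gbps link.

    efficiency is an assumed fraction of the nominal bandwidth that is usable
    after protocol overhead; 0.8 is a placeholder, not a measured value.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency must be in (0, 1]")
    size_gigabits = size_gb * 8  # 1 byte = 8 bits
    return size_gigabits / (link_gbps * efficiency)


# Hypothetical example: a 140 GB checkpoint over a 10 Gbps private connection.
print(f"{transfer_seconds(140, 10):.0f} s")  # prints 140 s
```

Real transfer times also depend on latency, congestion, and storage throughput at both ends, so treat the result as a lower-bound estimate.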

Take your workloads further

AI / machine learning

Accelerate inference for AI/ML models such as neural networks

High-performance computing

Unlock computational throughput to perform large-scale calculations

Game streaming + VR

Enable high-quality, immersive gameplay without costly hardware

Accelerate your AI performance worldwide

Connect with our AI experts to discover how Zenlayer Distributed Inference can help you deliver real-time, high-efficiency AI experiences across the globe.