- Solutions

- Products

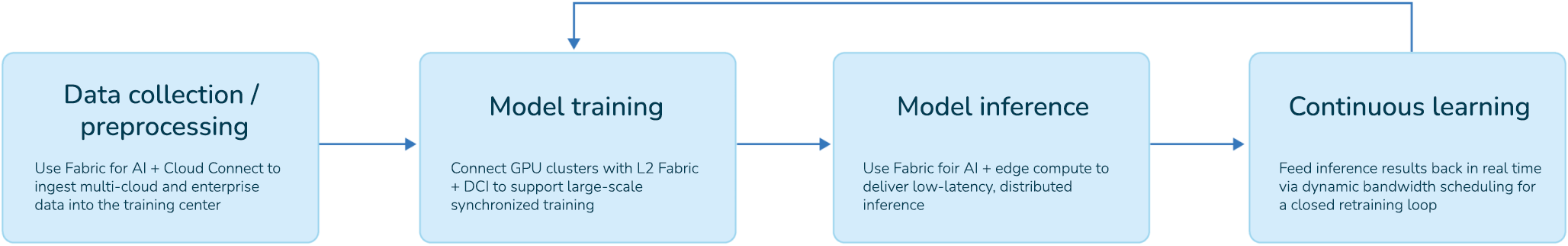

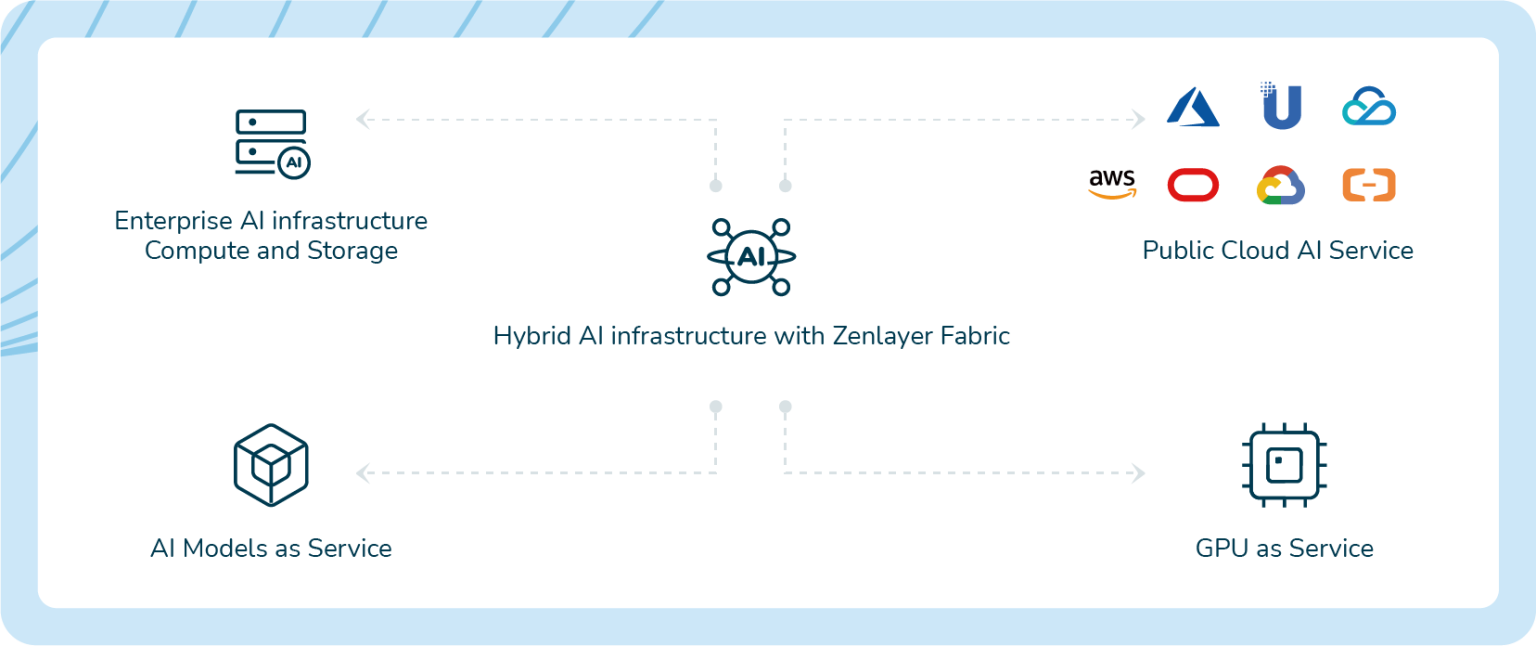

AI

Distributed Inference

Deploy and scale AI anywhere

Fabric for AI

High-speed network for AI connectivity

AI Gateway

One-stop access to global models

Compute

Bare Metal

On-demand dedicated servers

Virtual Machine

Scalable virtual servers

Networking

Cloud Connect

Onramp to public clouds

Cloud Router

Layer 3 mesh network

Private Connect

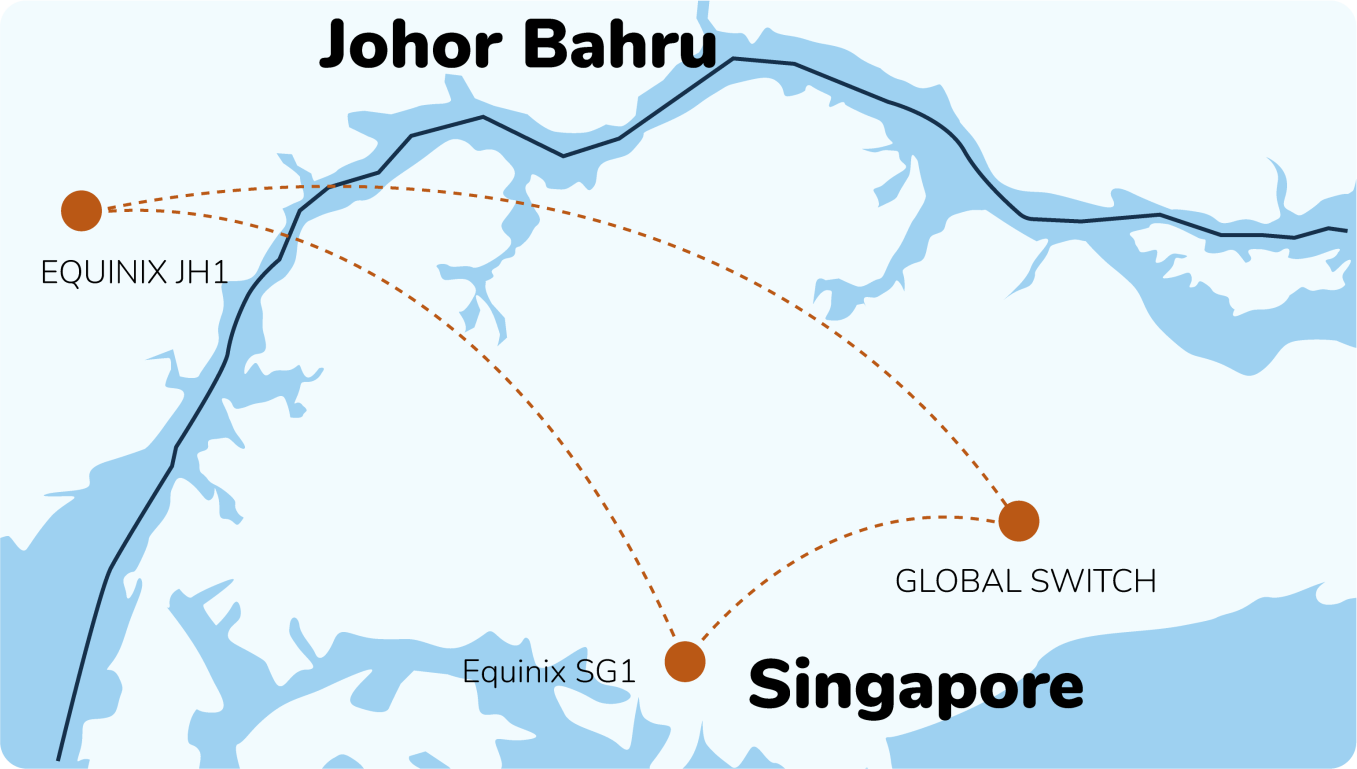

Layer 2 point-to-point connectivity

Virtual Edge

Virtual access gateway to private backbone

IP Transit

High-performance internet access

Acceleration

Global Accelerator

Improve application performance

CDN

Global content delivery network

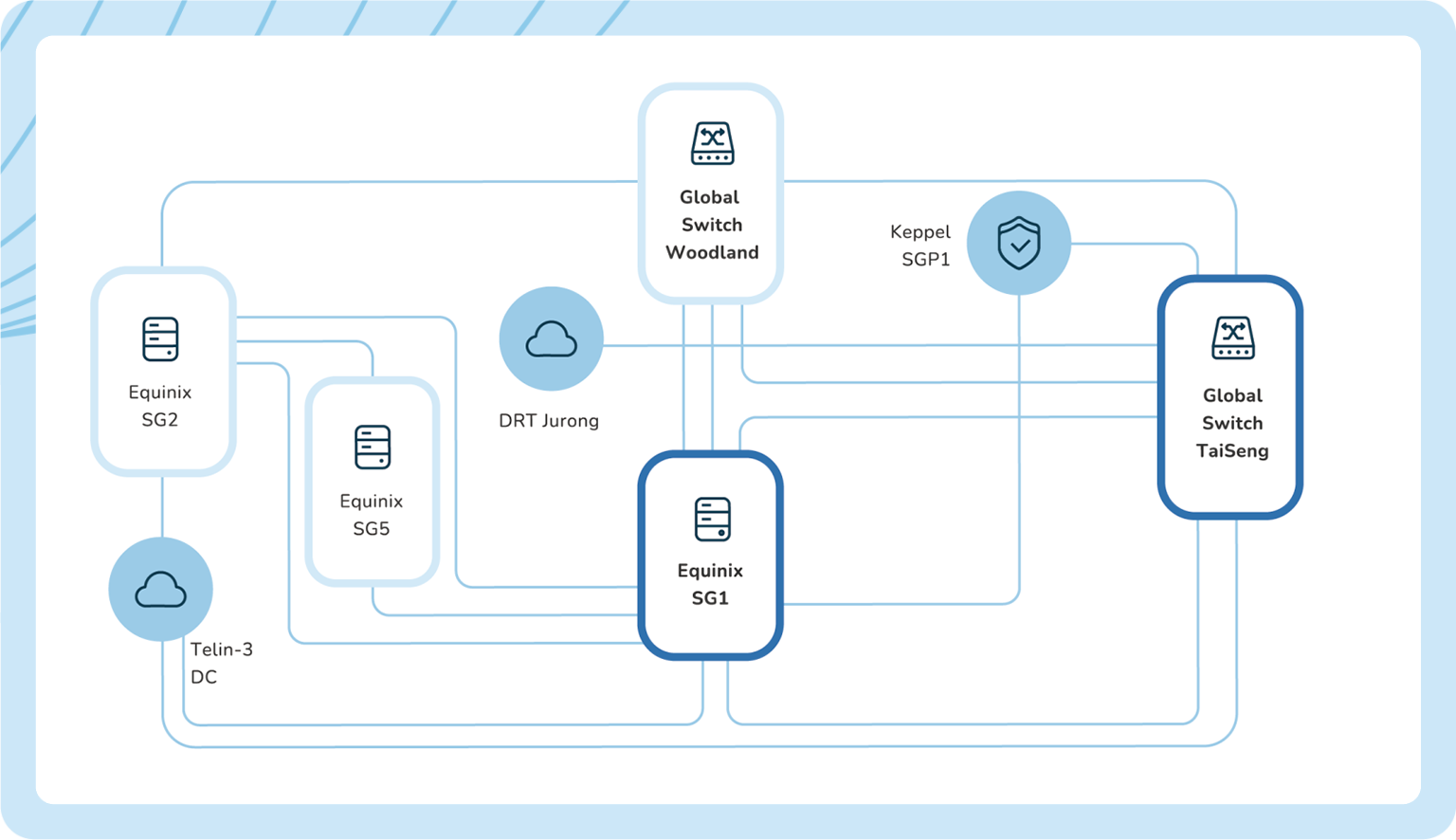

Edge Data Center

Edge colocation

Colocation close to end users

- Global Network

- Partners

- Resources
