100G servers deliver immense I/O power, but raw speed means little if the network adds delay.

What may seem like a flat, boundless internet is actually a complex, three-dimensional landscape shaped by geography, infrastructure, and policies. In this environment, distance isn’t just a number on a map but a force that directly impacts the speed and performance of our online experiences.

Building on our previous piece, The 100G server revolution – a deep dive into hardware design, this article examines how network latency can bottleneck even the fastest servers. We explore TCP, UDP, and QUIC to show why round-trip time matters and explain how Zenlayer’s edge PoPs, active peering, SDN backbone, and local partnerships keep latency low so you can fully harness 100 Gbps performance.

What is network latency?

Imagine clicking a video link. While you might want and expect it to load instantly, the reality is far more nuanced. The data for that video has to travel a physical path, through a network of cables, routers, and servers. This journey, invisible to our eyes, can be quick and easy or riddled with obstacles that lead to buffering and a frustrating loading spinner stuck on your screen instead of that cat video your friend just sent.

What’s the culprit behind these inconsistencies? Network latency, or the time it takes for a data packet to travel from your device to its destination and back, like a tiny messenger traversing a digital terrain.

We rely on light signals, which are incredibly fast, to transfer data. However, physics comes into play, as the distance the signal travels has a direct impact on latency. The farther the signal needs to travel, the longer it takes the tiny messenger to complete its round trip—hence, higher latency.

But distance isn’t the only player in this game. Local carrier policies, like traffic prioritization, can create bottlenecks that further delay the flow of data. Just like on a real highway, some lanes might have higher speeds while others pose restrictions, impacting how quickly information can reach you.

Consider this monument in downtown Los Angeles, a symbol of its many sister cities around the world. Let’s see how data would travel between this monument and its counterpart in Jakarta.

Image source: https://en.wikipedia.org/wiki/Los_Angeles_Sister_Cities_Monument

In a perfect world, with a single straight fiber optic cable between the two cities, light would make the round trip between Los Angeles and Jakarta (roughly 8,977 miles each way) in just 0.144 seconds (144 milliseconds). However, reality is far less ideal. The actual layout of submarine cables, the time required for routers to route packets at each hop, and the network capacity of Jakarta’s carriers can all significantly increase this time, up to around 208 milliseconds. This delay makes relying on a server in Los Angeles to serve users in Jakarta impractical.
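To see where the 144 ms figure comes from, here is a minimal sketch of the calculation. It assumes light covers roughly 200,000 km per second in optical fiber (about two-thirds of its speed in a vacuum) and that the cable follows a straight path, which real submarine routes do not. The function name is ours, chosen for illustration.

```python
MILES_TO_KM = 1.609344

# Assumption: light in optical fiber travels at roughly 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000


def fiber_rtt_ms(distance_miles: float) -> float:
    """Best-case round-trip time over a straight fiber path, in milliseconds."""
    one_way_km = distance_miles * MILES_TO_KM
    return 2 * one_way_km / FIBER_SPEED_KM_PER_S * 1000


ideal_rtt_ms = fiber_rtt_ms(8_977)
print(f"Ideal Los Angeles <-> Jakarta RTT: {ideal_rtt_ms:.0f} ms")  # about 144 ms
```

Anything above this floor comes from cable routing, per-hop processing, and carrier capacity rather than from physics.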

So, the question becomes: “How much does latency impact bandwidth reliability?”

Let’s take a closer look at network protocols and how we at Zenlayer solve this problem by expanding our infrastructure and actively participating in internet peering at hundreds of our data centers.

The hidden workhorses: Protocols

Beneath the internet’s surface, protocols quietly dictate how data travels, each with its own strengths and weaknesses. The two most commonly used protocols are:

- Transmission Control Protocol (TCP) – ensures reliable data delivery

- User Datagram Protocol (UDP) – prioritizes speed over order

TCP is more often used where data integrity is crucial, even if it means sacrificing some speed. UDP, in contrast, shines in situations that prioritize real-time delivery, even if it comes at the risk of occasional hiccups.

TCP: The reliable runner

Acting like a runner in a relay race where the baton is passed securely from one runner to the next, TCP ensures that data arrives in order. To achieve this, TCP uses a “send and acknowledgement” mechanism. It sends data packets and waits for confirmation from the receiver before sending more.

Modern TCP doesn't wait after every single packet. Its sliding window lets the sender transmit a batch of packets back to back, but it cannot send beyond that window until the receiver acknowledges what has already arrived. Although this back-and-forth adds some delay, it guarantees reliable data transfer.

Network latency – the time it takes for data to travel – significantly impacts TCP performance. The farther the distance, the longer the wait for acknowledgements. This is especially true when retransmission is required.

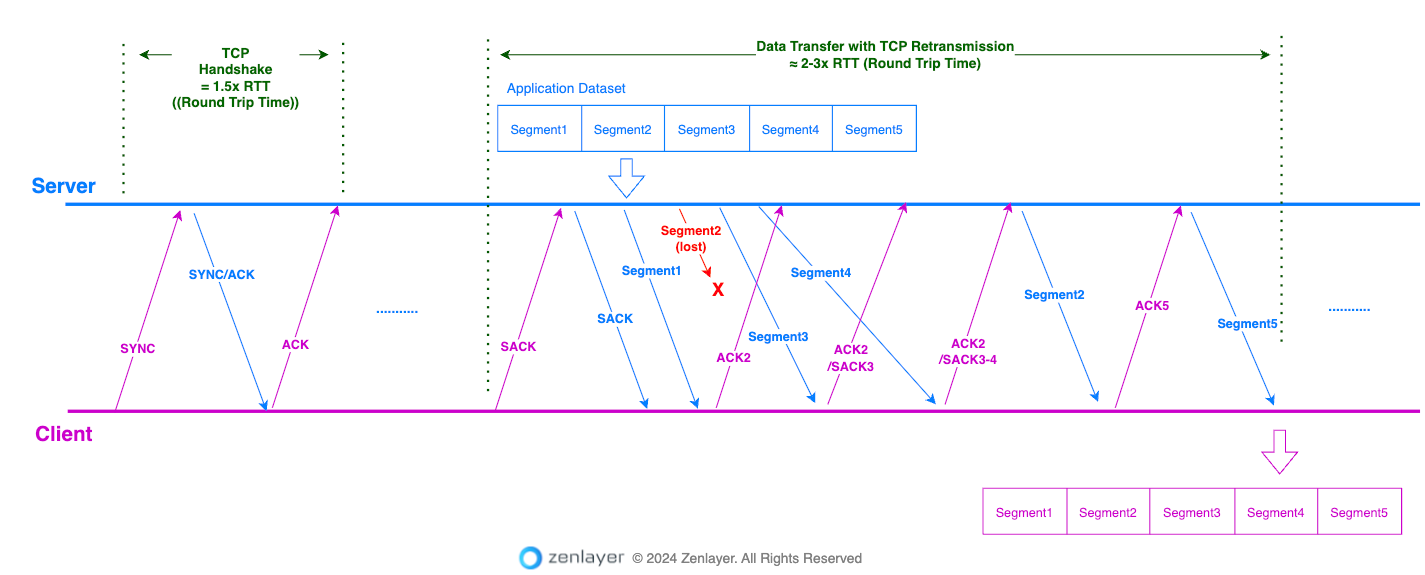

Let’s look into the following diagram demonstrating a TCP connection establishment and data transfer:

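The TCP handshake needs 3 steps (SYN, SYN-ACK, ACK) to establish a connection, which takes approximately 1.5 times the round-trip time (RTT) before both sides consider the connection established. The client can start sending data after about 1 RTT, as soon as it has received the SYN-ACK. This handshake is a prerequisite for establishing a data transfer path.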
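If you want to feel the handshake cost yourself, timing a TCP connect is a rough proxy: the call returns once the client has received the SYN-ACK, so it reflects about one RTT from the client's side. The sketch below is only an illustration. It connects to a throwaway listener on loopback so it runs anywhere, and `tcp_connect_ms` is a helper name we made up. Point it at a remote host and port of your choice to see real-world numbers.

```python
import socket
import time


def tcp_connect_ms(host: str, port: int, timeout: float = 5.0) -> float:
    """Time a TCP connect(), which returns once the SYN-ACK has arrived."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000


# Throwaway listener on loopback; port 0 lets the OS pick a free port.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]

connect_ms = tcp_connect_ms("127.0.0.1", port)
listener.close()
print(f"Handshake completed in {connect_ms:.3f} ms")
```

On loopback the result is a tiny fraction of a millisecond. Across an ocean, the same call takes at least one full round trip.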

Data transfer phase with segment loss and retransmission:

We’ll assume a commonly used SACK mechanism (defined in RFC 2018) for retransmission during this data transfer phase.

- The SACK option the client sends during the handshake, and the server's matching reply, indicate that both sides agree to use SACK for data transfer.

- The server sends segments 1-4 to the client. Unfortunately, segment #2 is lost.

- The client uses SACK to report that segments 3 and 4 arrived. Its cumulative acknowledgement, ACK 2, tells the server that segment 1 was received and that segment 2, the next one it expects, is missing.

- The server resends segment 2.

- The client receives segments 1-4 and can now request segment 5 by sending out ACK 5.

- The server sends out segment 5.

- With segments 1-5 all received, the client can assemble the complete dataset and get it ready for the application to use.
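The receiver's side of the steps above can be sketched in a few lines. This is a simplified model, not how a real TCP stack is written: it numbers whole segments starting at 1, whereas TCP acknowledges byte sequence numbers, and `ack_with_sack` is a hypothetical helper name.

```python
def ack_with_sack(received: set[int]) -> tuple[int, list[tuple[int, int]]]:
    """Return (cumulative ACK, SACK blocks) for a set of received segment numbers.

    The cumulative ACK is the next segment the receiver expects. Each SACK
    block is an inclusive (first, last) range received beyond the gap.
    """
    next_expected = 1
    while next_expected in received:
        next_expected += 1

    blocks: list[tuple[int, int]] = []
    for seg in sorted(s for s in received if s > next_expected):
        if blocks and seg == blocks[-1][1] + 1:
            blocks[-1] = (blocks[-1][0], seg)  # extend the current block
        else:
            blocks.append((seg, seg))  # start a new block
    return next_expected, blocks


after_loss = ack_with_sack({1, 3, 4})
after_retransmit = ack_with_sack({1, 2, 3, 4})
print(after_loss)        # (2, [(3, 4)])
print(after_retransmit)  # (5, [])
```

The first result corresponds to "ACK 2 plus SACK for 3-4", and the second to the ACK 5 that requests the next segment. Because the server learns exactly which segment is missing, it resends only segment 2, at the cost of one extra round trip.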

Calculating throughput based on the example above:

As you can see from the demonstration, this 5-segment data transfer requires around 3 RTTs. Let’s assume that the size of each segment is 1460 bytes, which we’ll use to run the “theoretical” number for the bandwidth-delay effect on this single TCP connection:

- Total data transferred: 5 segments * 1460 bytes/segment = 7300 bytes

- Total bits transferred: 7300 bytes * 8 bits/byte = 58,400 bits

We can calculate the effective throughput for different RTT values:

RTT = 30 milliseconds (ms):

Throughput = 58,400 bits / (3 * 0.03 seconds) ≈ 649 Kbps

RTT = 200 milliseconds (ms):

Throughput = 58,400 bits / (3 * 0.2 seconds) ≈ 97 Kbps
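The same arithmetic as a small script, in case you want to plug in your own RTT values. It uses the example's assumptions (5 segments of 1460 bytes delivered in about 3 round trips), and the function name is ours.

```python
def throughput_bps(segments: int, segment_bytes: int, rtts: float, rtt_s: float) -> float:
    """Effective throughput when `segments` take `rtts` round trips to deliver."""
    total_bits = segments * segment_bytes * 8
    return total_bits / (rtts * rtt_s)


for rtt_ms in (30, 200):
    bps = throughput_bps(segments=5, segment_bytes=1460, rtts=3, rtt_s=rtt_ms / 1000)
    print(f"RTT {rtt_ms} ms -> {bps / 1000:.0f} Kbps")
```

The amount of data is identical in both cases. Only the round-trip time changes, and the result drops by the same factor that the RTT grows.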

Latency vs. throughput

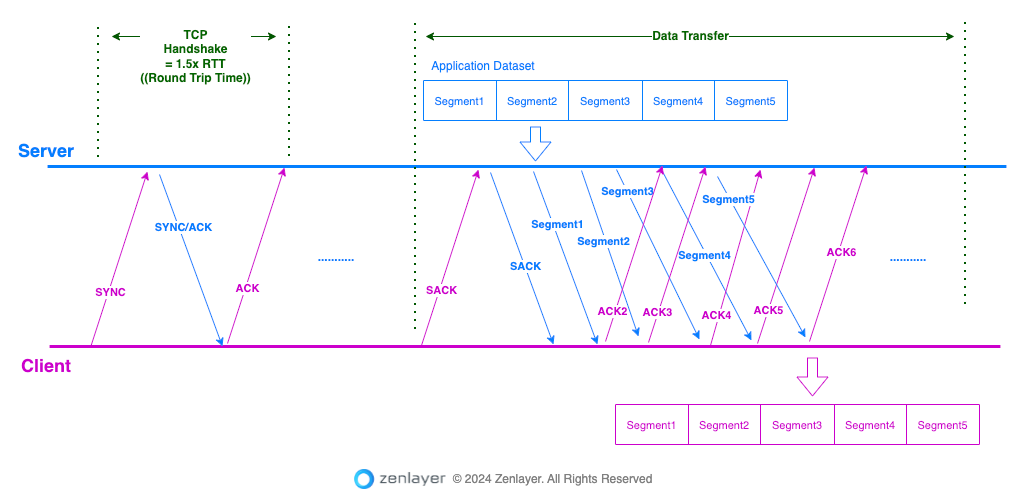

Ideal TCP transmission scenario

The diagram shown earlier in this article outlines a TCP data transfer with retransmission. In an ideal scenario, with a full TCP sliding window and continuous acknowledgements from the client, the data transfer resembles a water hose flowing continuously.

As long as the client acknowledges the segments received, the server can send the next ones without waiting. This can significantly reduce the impact of latency on throughput.
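Even in this ideal case, a single connection can have at most one window of data in flight per round trip, so throughput is capped at roughly window size divided by RTT. The sketch below works through that relationship. The 65,535-byte figure is the largest TCP window without the window scale option; the 25 ms and 200 ms RTTs and the helper names are simply values we picked for illustration.

```python
def window_limited_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on throughput when one window is sent per round trip."""
    return window_bytes * 8 / rtt_s


def window_needed_bytes(link_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    return link_bps * rtt_s / 8


CLASSIC_WINDOW = 65_535  # largest TCP window without window scaling

for rtt_ms in (25, 200):
    rtt_s = rtt_ms / 1000
    capped_mbps = window_limited_bps(CLASSIC_WINDOW, rtt_s) / 1e6
    needed_mb = window_needed_bytes(100e9, rtt_s) / 1e6
    print(f"RTT {rtt_ms} ms: 64 KB window caps at {capped_mbps:.1f} Mbps; "
          f"filling 100 Gbps needs {needed_mb:.1f} MB in flight")
```

The takeaway is that the longer the round trip, the more data must be kept in flight to keep a 100 Gbps link busy, which is why shorter paths matter even when TCP is behaving perfectly.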

In the example diagram below, the server sends segments 1-5 and the client acknowledges all receptions. This continuous flow of sending and acknowledging allows the data transfer to progress smoothly, minimizing the impact of latency.

However, real-world factors introduce limitations. User actions like clicking links, opening new pages, or sending transactions can introduce delays on the application layer. These delays affect how quickly the application dataset is generated and sent to the TCP layer for transmission.

In essence, the bottleneck might shift from the “send and acknowledgement” mechanism to the “user – server application” interaction layer, causing delays even with an ideal TCP transmission scenario.

User Datagram Protocol (UDP)

UDP prioritizes speed over order and reliability. It forgoes the “send and acknowledgement” mechanism used by TCP, making it ideal for low-latency applications where occasional data loss might be acceptable (e.g., live streaming).
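Here is a minimal sketch of what "no send and acknowledgement" looks like in practice, using two sockets on loopback so it runs anywhere. The payload is a made-up placeholder. Notice that `sendto` returns as soon as the datagram is handed to the operating system. Nothing tells the sender whether it arrived.

```python
import socket

# Receiver: bind to loopback; port 0 lets the OS pick a free port.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)  # don't block forever if the datagram is lost
receiver_addr = receiver.getsockname()

# Sender: no handshake, no connection, no acknowledgement.
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
sent_bytes = sender.sendto(b"frame-0001", receiver_addr)

payload, _ = receiver.recvfrom(2048)
sender.close()
receiver.close()
print(sent_bytes, payload)
```

If the datagram had been dropped along the way, the sender would never know. Any retry or ordering logic has to live in the application.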

However, for applications requiring guaranteed delivery, data loss can be problematic. Additionally, UDP doesn’t relieve the latency impact on the “user – server application” interaction layer.

Quick UDP Internet Connections (QUIC)

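QUIC emerges as a promising alternative for latency-sensitive applications. It runs on top of UDP, but unlike plain UDP it is a reliable transport with its own acknowledgements and loss recovery. Its speed advantage doesn't come from skipping acknowledgements. It comes from combining the transport and TLS 1.3 handshakes into a single round trip, or zero round trips when resuming a previous connection.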

QUIC also goes beyond UDP by incorporating congestion control mechanisms to avoid overwhelming the network and by multiplexing multiple independent streams over one connection, so a lost packet on one stream doesn't stall the others. These features aim to improve overall efficiency and reduce latency, making QUIC attractive for real-time applications like video calls and online gaming. QUIC is also the transport underneath HTTP/3, so it is already used for web browsing in major browsers.
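One place where QUIC's design shows up directly in latency is connection setup. The sketch below compares how many round trips pass before a client can send its first request on a new connection, then adds one more round trip for the request and response. It is a simplified model: it ignores server processing time, packet loss, and optimizations such as TCP Fast Open, and the function name is ours.

```python
# Round trips before the client can send its first request.
SETUP_RTTS = {
    "TCP + TLS 1.2": 3,            # 1 for TCP, 2 for TLS 1.2
    "TCP + TLS 1.3": 2,            # 1 for TCP, 1 for TLS 1.3
    "QUIC (new connection)": 1,    # transport and TLS 1.3 combined
    "QUIC (0-RTT resumption)": 0,  # request rides along with the first flight
}


def time_to_first_response_ms(setup_rtts: int, rtt_ms: float) -> float:
    """Setup round trips plus one round trip for the request and response."""
    return (setup_rtts + 1) * rtt_ms


for rtt_ms in (30, 200):
    for name, setup in SETUP_RTTS.items():
        total = time_to_first_response_ms(setup, rtt_ms)
        print(f"RTT {rtt_ms:>3} ms  {name:<24} {total:>5.0f} ms")
```

At a 200 ms RTT, the model gives 600 ms for TCP with TLS 1.3 versus 400 ms for a new QUIC connection. The saving is real, but every remaining round trip still costs the full RTT.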

It’s important to note that QUIC doesn’t completely eliminate the impact of latency.

While it optimizes data transfer, network congestion and the physical distance between sender and receiver still introduce delays. Furthermore, although QUIC typically moves reliability and congestion control out of the operating system kernel and into a library that runs alongside the application, it can’t fully address situations requiring heavy user interaction. In such scenarios, user actions themselves might introduce delays on the application layer, similar to what can occur with TCP.

Zenlayer’s edge network for seamless 100G performance

Unlike traditional internet service providers (ISPs) that rely heavily on centralized data centers, Zenlayer prioritizes edge data centers. These strategically located facilities are closer to end users, significantly reducing the physical distance data travels to minimize network latency. This focus on edge networks is particularly beneficial in emerging areas, where running internet services presents several infrastructure challenges:

- Limited existing infrastructure: These regions often lack established infrastructure like fiber optic cables or cell towers. This makes it expensive and time-consuming for telecom providers to build networks, potentially leading to isolation from competitors.

- Mobile-first focus: The widespread adoption of smartphones and affordable data plans has driven a mobile-first approach in many emerging areas. This can lead to a focus on mobile network infrastructure development, leaving fixed-line internet options underdeveloped in some areas.

- Geographical obstacles: Remote or geographically challenging locations like mountains, deserts, or island chains present significant difficulties. Building and maintaining network infrastructure in these areas is expensive and complex, discouraging competition from entering the market.

- Regulatory hurdles: Emerging markets might have complex or unclear regulations regarding telecom services. Dealing with these complexities can be a barrier for established providers.

While emerging regions present significant infrastructure challenges, Zenlayer has a multi-pronged approach to deliver high-performance internet services:

- Extensive network footprint: Zenlayer has built a robust network with over 350 points of presence (PoPs) strategically located around the globe. This extensive coverage significantly improves data transfer speeds and user experience.

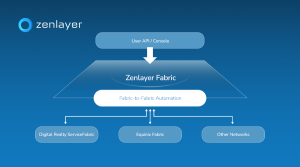

- Active peering: Zenlayer actively participates in internet peering, a process where networks directly exchange traffic without relying on third-party providers. This improves performance by offering shorter paths for data to travel, especially in emerging areas where traditional infrastructure might be limited. By peering with numerous internet service providers and content delivery networks (CDNs), we ensure efficient data routing.

- SDN backbone for agility: We’ve built our own software-defined network (SDN) backbone, which acts as the central nervous system of our overall network. This SDN architecture allows for dynamic routing and flexible management, giving us extra agility to optimize traffic flow and quickly adapt to changing network conditions.

- Local partnerships: We recognize the importance of local partnerships in emerging areas. By working with established local providers, we can leverage their expertise to navigate complex regulatory environments. These partnerships help us overcome local infrastructure challenges and deliver reliable service to end users.
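To make the idea of latency-aware routing concrete, here is a generic sketch that picks the lowest-latency path through a small graph using Dijkstra's algorithm. It is not a description of how Zenlayer's SDN backbone works internally. The node names and per-link latencies are entirely hypothetical and exist only to show how more peering options and closer PoPs can change the best available path.

```python
import heapq


def lowest_latency_path(graph: dict, src: str, dst: str) -> tuple[float, list[str]]:
    """Dijkstra's algorithm over per-link latencies in milliseconds."""
    queue = [(0.0, src, [src])]
    settled: dict[str, float] = {}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in settled and settled[node] <= cost:
            continue  # already reached this node at least as cheaply
        settled[node] = cost
        for neighbor, latency_ms in graph.get(node, {}).items():
            heapq.heappush(queue, (cost + latency_ms, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical topology and latencies, for illustration only.
links = {
    "user": {"pop-a": 8, "pop-b": 15},
    "pop-a": {"pop-c": 40, "origin": 120},
    "pop-b": {"pop-c": 20},
    "pop-c": {"origin": 30},
}

cost, path = lowest_latency_path(links, "user", "origin")
print(cost, path)  # 65.0 ['user', 'pop-b', 'pop-c', 'origin']
```

In this made-up example, the nearest first hop is not on the fastest path overall. A controller with a view of the whole network can account for that, and can recompute as link latencies change.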

Zenlayer’s powerful combination of extensive network reach, active peering, a robust SDN backbone, and strong local partnerships ensures that 85% of users experience latency below 25 milliseconds. This significantly reduces network delays, allowing you to maximize the potential of your 100G equipment.